Welcome to Research Log #048! We document research progress across the various initiatives in the Manifold Research Group and, in the Pulse of AI, highlight breakthroughs from the broader research community that we find interesting!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our GitHub.

Manifold Updates

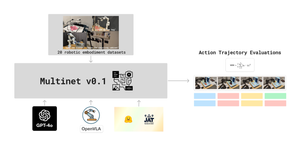

MultiNet: The team is scoping out next steps and milestones for MultiNet and laying the groundwork for the dataset preparation that upcoming additions will need. We’re also exploring new research directions for future versions, including adapting OpenVLA to out-of-distribution (OOD) OpenX datasets, integrating non-OpenX control datasets, and handling control datasets with discrete action spaces. Stay tuned for more updates in the coming weeks!

Metacognition in Robotics: We are actively recruiting new members to help accelerate progress! This week, we selected new datasets and models to verify and explore state-of-the-art (SOTA) techniques for model calibration. Additionally, we brainstormed research directions aimed at extending SOTA calibration techniques to multimodal contexts. More information is available in our Discord channel! If you are interested in working on this project, reach out via the Discord channel or apply to the OS Team here.

Swarm Assembly of Modular Space Systems: We are finalizing the problem statement by formulating the dynamics of the system, using the parallel axis theorem in the derivations. To address the challenge of identifying enclosed spaces within a cubic lattice structure, we are investigating tools and concepts from chemistry and geometry, including the Euler characteristic and crystal structure analysis. The next step is to propose an approach for trajectory planning and control input generation that assembles the modules into the desired configuration. Read more about this project in our public overview document, which can be accessed here.
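To make the Euler characteristic idea concrete, here is a minimal sketch, not the team's actual method. It assumes the structure is a set of unit cubes at integer lattice coordinates and counts the distinct vertices, edges, faces, and cubes. The function name `euler_characteristic` and the doubled-coordinate encoding are illustrative choices.

```python
from itertools import product


def euler_characteristic(cubes):
    """Return V - E + F - C for a set of unit cubes on an integer lattice.

    Each cube is given by its minimum corner (x, y, z). Cells are encoded in
    doubled coordinates: a cube's center is (2x+1, 2y+1, 2z+1), and every
    vertex, edge, and face of that cube sits at an offset of -1, 0, or +1
    per axis. A cell's dimension equals its number of odd coordinates.
    """
    cells = set()
    for x, y, z in cubes:
        for dx, dy, dz in product((-1, 0, 1), repeat=3):
            cells.add((2 * x + 1 + dx, 2 * y + 1 + dy, 2 * z + 1 + dz))
    # Alternating sum over unique cells: +1 for even dimension, -1 for odd.
    return sum((-1) ** sum(c % 2 for c in cell) for cell in cells)


# A 3x3x3 block with the center cube missing encloses one cavity.
block = {(x, y, z) for x in range(3) for y in range(3) for z in range(3)}
hollow_shell = block - {(1, 1, 1)}

print(euler_characteristic(block))         # 1
print(euler_characteristic(hollow_shell))  # 2
```

For such a structure the Euler characteristic equals the number of connected components minus the number of tunnels plus the number of enclosed cavities. A value above 1 for a connected, tunnel-free assembly therefore signals an enclosed space, but the number alone cannot separate the three contributions, so a practical detector would need additional information.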

Generative Biology: We are enhancing open-source data profiling and performance tracking, recruiting team members to help finalize research directions in generative and synthetic biology, and starting a survey paper based on our ongoing literature review. Stay tuned via our Generative Biology Discord channel!

Pulse of AI

Edify Image: High-Quality Image Generation with Pixel Space Laplacian Diffusion Models

Researchers at NVIDIA have released Edify Image, a suite of pixel-space diffusion models that generate photorealistic images with high precision. The models are trained with a novel Laplacian diffusion process in which image signals in different frequency bands are attenuated at different rates. This supports applications such as text-to-image synthesis and 4K upsampling, and gives the models finer control and better efficiency when generating high-quality images. For those interested in the technical details, the paper is available here.
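As a rough illustration of what "different frequency bands" means here, the sketch below splits a 1D signal into Laplacian-pyramid-style bands and scales each band by its own gain. It is a toy under stated assumptions and not Edify Image's diffusion process: it uses simple pair averaging in place of a proper low-pass filter, assumes the signal length is divisible by 2 raised to the number of levels, and all function names and the `gains` parameter are hypothetical.

```python
def downsample(signal):
    # Average neighboring pairs to halve the resolution.
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]


def upsample(signal):
    # Repeat each sample twice to double the resolution.
    return [value for value in signal for _ in range(2)]


def laplacian_bands(signal, levels):
    """Split a signal into `levels` detail bands plus a coarse residual."""
    bands = []
    current = list(signal)
    for _ in range(levels):
        coarse = downsample(current)
        predicted = upsample(coarse)
        # Detail band: what the coarser level cannot represent.
        bands.append([a - b for a, b in zip(current, predicted)])
        current = coarse
    bands.append(current)  # low-frequency residual
    return bands


def reconstruct(bands, gains=None):
    """Rebuild the signal, scaling band i by gains[i] (all 1.0 by default)."""
    gains = gains or [1.0] * len(bands)
    current = [gains[-1] * value for value in bands[-1]]
    for band, gain in zip(reversed(bands[:-1]), reversed(gains[:-1])):
        current = [u + gain * d for u, d in zip(upsample(current), band)]
    return current


signal = [1, 3, 2, 6, 5, 5, 0, 4]
bands = laplacian_bands(signal, levels=2)
smooth = reconstruct(bands, gains=[0.0, 0.5, 1.0])  # damp fine detail most
```

With all gains set to 1.0 the reconstruction returns the original signal. Lowering the gain on the finer bands removes high-frequency detail while leaving the coarse structure intact.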

PaliGemma 2: A Family of Versatile VLMs for Transfer

Google DeepMind’s PaliGemma 2 is a significant advancement in vision-language models. Researchers combined the SigLIP-So400m vision encoder with Gemma 2 language models to create PaliGemma 2, which comes in 3B, 10B, and 28B parameter variants and supports image resolutions of 224px, 448px, and 896px. The family is designed for transfer: it can be fine-tuned quickly across a wide range of tasks that involve understanding visual input and generating text. The full paper is here.

Multimodal Autoregressive Pre-Training of Large Vision Encoders

Apple’s AIMv2 marks a significant advancement in vision models, using a multimodal autoregressive pre-training approach. The model is trained to predict image patches and text tokens in sequence, which improves performance on multimodal understanding benchmarks. AIMv2 also shows strong recognition capabilities: its 3B variant achieves 89.5% accuracy on ImageNet with a frozen trunk. For more details, the full paper is here.

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter and LinkedIn!