Welcome to Research Log #046! We document weekly research progress across the various initiatives in the Manifold Research Group, and highlight breakthroughs from the broader research community we think are interesting in the Pulse of AI!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our GitHub.

Manifold Updates

Community Research Call #3: We just hosted Community Research Call #3, which was our biggest one yet! We covered progress across our projects, including Multimodality, Robotics, and MetaCognition, as well as new focus areas like Generative Biology. The recording of the call is included below:

The slides are publicly viewable here.

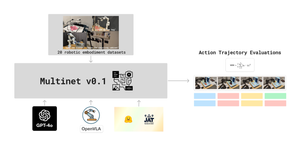

MultiNet: We've obtained initial profiling results for GPT-4 on OpenX and have adapted JAT for zero-shot inference on the platform, with profiling nearly complete on this front. Up next, we're gearing up to kick off the remaining GPT-4 profiling across the entire OpenX dataset. Stay tuned for more updates, and check out the progress at https://github.com/ManifoldRG/MultiNet!

Multimodal Action Models: We're making great progress on the profiling effort of OpenVLA on out-of-training OpenX datasets. Right now, we're focused on adapting OpenVLA to handle OpenX datasets with different action spaces from the ones it was originally fine-tuned on.

Metacognition: We've successfully reproduced benchmark results for LLaMA 3.1 8B on GSM8K and are now working to establish baseline results for System 2 calibration error. Initial experiments indicate a weak but measurable System 2 calibration effect. More information is available in our Discord channel!

Swarm Assembly of Modular Space Structures: We've drafted a public overview document covering the motivation, technical background, problem formulation, and roadmap, which can be accessed here. Currently, we're exploring existing representations of modular structures and methods for assembling them. You can see all of the literature we’re reviewing in our public Zotero library.

Foundation Models for Bio: We've outlined the roadmap for 2025, with key milestones set for Q2 and Q4. By Q2, we aim to optimize a BioML open-source model, publish state-of-the-art benchmarking and a BioML dataset repository, and finalize our research directions and team structure. In Q4, we'll focus on publishing results from the BioML model optimization and a synthetic biology survey, actively implementing our research, and solidifying timelines with an emphasis on innovative methodologies. Join the conversation on this project’s Discord channel!

Pulse of AI

Wall-E: World Alignment by Rule Learning Improves World Model-Based LLM Agents

WALL-E is an LLM agent that integrates model-predictive control (MPC) with rule learning. Unlike previous agents that rely on extensive fine-tuning or large token buffers, WALL-E uses a neurosymbolic world model that combines the reasoning power of LLMs with a few key learned rules. This aligns the model's predictions more closely with the environment's actual dynamics, allowing more efficient exploration and task completion in open-world environments like Minecraft and ALFWorld. On benchmarks, WALL-E outperforms existing methods, boosting success rates by 15-30% in Minecraft while using fewer replanning rounds and significantly fewer tokens. For those interested in the technical details, the paper is available here.

Aria: An Open Multimodal Native Mixture-of-Experts Model

ARIA is an open-source, multimodal-native model that integrates text, code, images, and video into a single system and performs strongly across a variety of tasks. With a mixture-of-experts architecture that activates 3.9B parameters per visual token and 3.5B per text token, ARIA outperforms comparably sized open models like Pixtral-12B and Llama3.2-11B on multimodal tasks while maintaining lower inference costs. Pre-trained on 6.4T language tokens and 400B multimodal tokens using a four-stage pipeline, ARIA performs well at language understanding, multimodal processing, and long-context comprehension. Released under an open license, its codebase is designed to facilitate real-world applications, supporting easy adaptation and fine-tuning on a wide range of data sources. The full paper is here.

Addition is All You Need for Energy-Efficient Language Models

In response to the high energy demands of large neural networks, this paper introduces L-Mul, a novel algorithm that approximates floating-point multiplication using integer additions. This method significantly reduces computational costs while maintaining high precision. L-Mul achieves greater precision than 8-bit floating-point multiplications while consuming up to 95% less energy for element-wise tensor multiplications and 80% less for dot products. The algorithm integrates well into existing transformer-based models, such as in the attention mechanism, delivering near-lossless performance across various tasks, including language and vision benchmarks. For more details, the full paper is here.
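To give a rough feel for how integer addition can stand in for floating-point multiplication, here is a minimal Python sketch. It is not the paper's implementation: it assumes positive, normal float32 inputs, ignores sign, zero, and overflow handling, and the helper names and the `offset_exp=4` correction term are our own assumptions for illustration.

```python
import struct

MANTISSA_BITS = 23              # float32 mantissa width
EXP_BIAS = 127 << MANTISSA_BITS  # exponent bias, shifted into the exponent field


def float_to_bits(x: float) -> int:
    """Reinterpret a value (rounded to float32) as an unsigned 32-bit integer."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float(b: int) -> float:
    """Reinterpret an unsigned 32-bit integer as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", b & 0xFFFFFFFF))[0]


def approx_mul(x: float, y: float, offset_exp: int = 4) -> float:
    """Approximate x * y using only integer additions on the float32 bit patterns.

    Adding the bit patterns adds the exponents and the mantissas at once.
    The mantissa product term is replaced by a constant 2**-offset_exp
    (an assumed value here), added as an integer in the mantissa field.
    """
    if x <= 0 or y <= 0:
        raise ValueError("this sketch only handles positive inputs")
    correction = 1 << (MANTISSA_BITS - offset_exp)
    bits = float_to_bits(x) + float_to_bits(y) - EXP_BIAS + correction
    return bits_to_float(bits)


if __name__ == "__main__":
    for a, b in [(1.5, 2.0), (3.7, 0.3), (100.25, 1.0)]:
        print(a, b, "exact:", a * b, "approx:", approx_mul(a, b))
```

For example, `approx_mul(1.5, 2.0)` gives 3.125 against an exact product of 3.0. The sketch trades a few percent of relative error for replacing the mantissa multiplication with a single integer add, which is the kind of trade-off the paper explores at the hardware level.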

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter and LinkedIn!