Welcome to Research Log #043! We document weekly research progress across the various initiatives in the Manifold Research Group, and in the Pulse of AI we highlight breakthroughs from the broader research community that we think are interesting!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our GitHub.

Manifold Updates

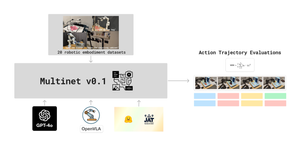

MultiNet: We’ve kicked off the profiling sprint to evaluate several state-of-the-art models on MultiNet! We’ve also open-sourced a script that automatically translates multiple shards of a control dataset from the v0 dataset to TFDS. Additionally, we’re wrapping up the download of the internal version of the datasets to our cloud instance for profiling. Stay tuned for more updates, and join the discussion on Discord!

Multimodal Action Models: We’ve modified the JAT codebase to start getting preliminary inferences on some of the v0 control datasets. Additionally, we’re working on building a prompt engineering framework to test the zero-shot and few-shot capabilities of state-of-the-art VLMs on control/action data.

Metacognition in Robotics: The team is conducting a literature survey on self-evaluation and measuring calibration error in vision-language models (VLMs), with plans to extend this work to vision-language-action models (VLAs) in the future. We're currently searching for robotics-related vision-language benchmark datasets to measure the calibration error of VLMs, and we're exploring prompting strategies to lower it. Relevant papers can be found in our public Zotero library.
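For readers unfamiliar with calibration error, here is a minimal sketch of one common way to measure it, expected calibration error (ECE) with equal-width confidence bins. This illustrates the general metric and is not the team's evaluation code. The function name, the bin count of 10, and the toy inputs are all assumptions made for the example.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Illustrative ECE: weighted average gap between confidence and accuracy.

    confidences: model-reported probabilities in [0, 1], one per prediction.
    correct: booleans, True where the prediction was right.
    n_bins: number of equal-width confidence bins (10 is an assumed default).
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if any(c < 0.0 or c > 1.0 for c in confidences):
        raise ValueError("confidences must lie in [0, 1]")
    total = len(confidences)
    if total == 0:
        return 0.0

    # Group predictions into equal-width bins by confidence.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, ok))

    # Sum |mean confidence - accuracy| per bin, weighted by bin size.
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(mean_conf - accuracy)
    return ece


# Toy example: a model that claims 90% confidence but is right 3 times out of 4.
toy_ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, True, False])
```

A lower value means the model's stated confidence tracks its actual accuracy more closely. In the toy example the gap between 90% confidence and 75% accuracy gives an ECE of roughly 0.15.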

Cooperative Robotics: The team is starting a literature survey to explore answers to the question: “How can swarms of agents adapt to real-time, unexpected changes in their environment and retain or recover mission success?” We will make the survey spreadsheet public soon, so stay tuned for updates!

Pulse of AI

To Code, or Not To Code?: Can including code data in LLM training boost performance on non-code tasks? Researchers from Cohere find that adding code improves performance on various NLP tasks, including reasoning, world knowledge, and generative quality, with gains of up to 8.2%. The paper highlights the importance of code data quality and quantity, and shows that using code during the cooldown phase enhances overall performance. Together, these results suggest that code is valuable for general LLM capabilities, not just for coding tasks. For those interested in the technical details, the paper is available here.

Efficient, High-quality 3D Mesh Generation: How can we generate high-quality 3D meshes quickly and efficiently from sparse image data? Researchers from UC San Diego, Hillbot Inc., and Zhejiang University have developed MeshFormer to tackle this challenge. MeshFormer creates detailed 3D meshes from just a few multi-view images in seconds, using a combination of 3D native structures, transformers, and convolutional neural networks. This approach significantly reduces the input data and computing power required, outperforming traditional methods that require extensive resources and complex training. MeshFormer can also generate 3D models from single images or text prompts, making 3D asset creation faster and more accessible. You can find more about this method in the paper here.

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, LinkedIn, and Mastodon!