Welcome to Research Log #042! Each week we document research progress across the Manifold Research Group's initiatives, and in the Pulse of AI we highlight breakthroughs from the broader research community that we find interesting!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our GitHub.

Manifold Updates

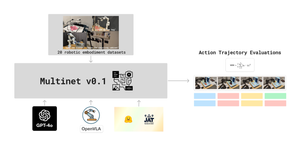

MultiNet: Our team, led by Pranav Guruprasad and Kyle Song, has defined the evaluation metrics we will use to benchmark several state-of-the-art (SoTA) VLMs and VLAs.

- GitHub: https://github.com/ManifoldRG/MultiNet

- Dataset Coverage: https://github.com/ManifoldRG/MultiNet/issues/19

We are setting up infrastructure to kick off profiling this week!

Multimodal Action Models: Our team is doing preliminary work on how we might extend our work on Neko using modern VLA architectures, such as OpenVLA and Octo. Keep an eye out for updates here, and join the conversation on Discord!

Metacognition in Robotics: The MetaCog team has just launched and is exploring several approaches within meta-learning and the broader metacognitive landscape. More soon!

Cooperative Robotics: Our Cooperative Robotics project has also recently launched! First mentioned in Research Log #041, the project looks into ways to enable multi-agent robotic systems to cooperate on tasks. We’re starting with an application to cooperative transportation of objects in orbit; future directions include learned inter-agent communication, swarm adaptation to out-of-distribution events, and massive multi-agent deep RL. If that sounds interesting to you, come join the conversation in our Discord channel!

Foundation Models for Bio: We’ve just kicked off our new project on foundation models for biology applications! It’s currently in an exploratory phase, and we’re investigating several problems, including drug discovery, feature detection, and protein mapping. If AI for bio excites you, we’d love to have you join the conversation on our channel!

CRC #2: We just hosted our second Community Research Call! The call was jam-packed with project updates, new project launches, event announcements, and more. We recorded it, and the video is available here! We’ll have more event announcements soon, including guest speakers for research talks and future CRCs, so stay tuned!

Pulse of AI

This week, we've seen advances in attention mechanisms, continual learning, and few-shot learning. From more efficient transformer models to strategies for retaining knowledge across tasks, the following papers push the boundaries of what's possible in the field. Here's a brief overview:

Efficient Attention via Partial Decoding: The next evolution of transformers? Researchers have introduced a new approach to attention in transformers that could significantly change how large language models (LLMs) operate. The method, "Partial Decoding," reduces computational overhead by selectively processing only parts of the input sequence at each layer. This is especially useful for long sequences, where the cost of standard attention grows quadratically with sequence length. The authors report that the approach maintains competitive performance while cutting computational costs, making it a promising direction for future transformer-based models. The full paper is here.
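The summary doesn't spell out how Partial Decoding chooses which positions to process, so the sketch below does not reproduce the paper's method. As a stand-in, it uses a simple sliding-window restriction (each query attends only to the most recent `window` keys) to show where savings of this kind come from: full causal attention scores about n²/2 query-key pairs, while the windowed version scores at most n × window. The function names and the `window` parameter are hypothetical, chosen for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def causal_attention(q, k, v):
    """Standard causal attention: builds an (n, n) score matrix, so cost is O(n^2)."""
    n, d = q.shape
    scores = q @ k.T / np.sqrt(d)
    # Mask out future positions (strictly above the diagonal).
    future = np.triu(np.ones((n, n), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v


def windowed_attention(q, k, v, window):
    """Hypothetical stand-in for selective processing: each query scores only
    the `window` most recent keys (itself included), so cost is O(n * window)."""
    n, d = q.shape
    out = np.zeros((n, v.shape[1]))
    for i in range(n):
        lo = max(0, i - window + 1)
        s = q[i] @ k[lo:i + 1].T / np.sqrt(d)
        out[i] = softmax(s) @ v[lo:i + 1]
    return out


def pairs_scored(n, window=None):
    """Query-key pairs used by causal (window=None) or windowed attention."""
    if window is None:
        return n * (n + 1) // 2
    return sum(min(i + 1, window) for i in range(n))


rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(16, 8)) for _ in range(3))
print(np.allclose(windowed_attention(q, k, v, window=16), causal_attention(q, k, v)))  # True
print(pairs_scored(1024), pairs_scored(1024, window=128))  # 524800 122944
```

With `window` equal to the sequence length the two functions agree; shrinking the window trades context for compute, which is the general kind of trade-off any selective-processing scheme has to manage.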

Exploring Novel Directions in Continual Learning with Transformer Models: In a fresh take on continual learning, researchers propose a strategy tailored to transformer models that lets them retain knowledge across tasks without catastrophic forgetting. By introducing a dynamic memory module and adaptive gating mechanisms, the approach allows the model to integrate new information while preserving previously learned knowledge. This addresses one of the core challenges for transformers: learning continuously over time without losing prior skills. The full paper is here.
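The summary doesn't describe the paper's memory module or gates in detail. The sketch below is a generic, GRU-style gated update, assumed here purely for illustration: a gate `g` in (0, 1) decides, per dimension, how much of the stored memory `m` to keep versus how much to overwrite with a new representation `h`, via `m <- g * m + (1 - g) * h`. The `GatedMemory` class, its random gate weights, and the `gate_bias` parameter are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GatedMemory:
    """Hypothetical gated memory slot; not the paper's module."""

    def __init__(self, dim, gate_bias=0.0, seed=0):
        rng = np.random.default_rng(seed)
        self.memory = np.zeros(dim)
        # Gate weights read the concatenation [memory; new input], so how much
        # is retained depends on both what is stored and what arrives.
        self.W = rng.normal(scale=0.1, size=(dim, 2 * dim))
        self.b = np.full(dim, gate_bias)

    def update(self, h):
        h = np.asarray(h, dtype=float)
        g = sigmoid(self.W @ np.concatenate([self.memory, h]) + self.b)
        # g near 1 preserves the stored memory; g near 0 overwrites it with h.
        self.memory = g * self.memory + (1.0 - g) * h
        return g


# A strongly negative bias pushes the keep-gate toward 0: new input is written in.
plastic = GatedMemory(dim=4, gate_bias=-20.0)
plastic.update(np.ones(4))

# A strongly positive bias pushes the keep-gate toward 1: old memory survives.
stable = GatedMemory(dim=4, gate_bias=20.0)
stable.memory = np.array([1.0, 2.0, 3.0, 4.0])
stable.update(np.zeros(4))
print(plastic.memory, stable.memory)
```

In an actual continual-learning setup the gate parameters would be learned rather than fixed; here the bias is set by hand only to show the two extremes between which an adaptive gate interpolates.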

Advancing the Frontiers of Few-Shot Learning with Adaptive Risk Minimization: Few-shot learning just got a boost from Meta with the introduction of Adaptive Risk Minimization (ARM). This framework lets models adapt quickly to new tasks with minimal data by dynamically adjusting the risk associated with different tasks during training. The key finding is ARM's ability to generalize across diverse tasks, which improves performance when only a few examples are available for each new task. This approach could change how we think about model adaptability and transfer learning. The full paper is here.
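The summary leaves out ARM's exact procedure, so the sketch below does not implement ARM. To make the idea of "dynamically adjusting the risk associated with different tasks" concrete, it swaps in a different, simpler technique: the multiplicative-weights task reweighting used in online group distributionally robust optimization (group DRO), where tasks with higher loss receive more weight in the training objective. The function names, the `step_size` value, and the loss numbers are assumptions for illustration.

```python
import numpy as np


def reweight_tasks(weights, task_losses, step_size=0.1):
    """Multiplicative-weights update: scale each task's weight by
    exp(step_size * loss), then renormalize to a probability vector."""
    w = np.asarray(weights, dtype=float) * np.exp(
        step_size * np.asarray(task_losses, dtype=float)
    )
    return w / w.sum()


def weighted_risk(weights, task_losses):
    """Training objective: per-task losses averaged under the current weights."""
    return float(np.dot(weights, task_losses))


# Made-up per-task losses, held fixed here for simplicity; in training they
# would be recomputed on each batch before every reweighting step.
losses = np.array([0.2, 0.5, 1.5])
w = np.full(3, 1.0 / 3.0)  # start from uniform task weights
for _ in range(10):
    w = reweight_tasks(w, losses)

print(w.round(3), weighted_risk(w, losses), losses.mean())
```

Compared with a plain average over tasks, the weighted risk emphasizes the tasks the model currently handles worst; the paper remains the authority on how ARM itself adjusts risk.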

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, LinkedIn, and Mastodon!