Welcome to Research Log #041! We document weekly research progress across the various initiatives in the Manifold Research Group, and highlight breakthroughs from the broader research community we think are interesting in the Pulse of AI!

We’re growing our Research Team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our Github.

Manifold Updates!

- New Paper Release! If you didn't hear, we recently released an exciting position paper on LLM based Agents. Check out the announcement and details here.

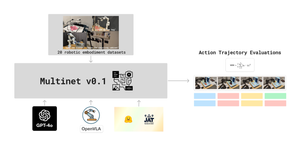

- MultiNet: We've successfully collected the full multinet dataset, covering everything in the V0 spec. The dataset is well over 50tb in size. We're moving on to design the ideal benchmark and evals in preparation for training models. We would like to thank @pranavguru13 for his work on making this possible.

- Cooperative Robotics: The Cooperative Robotics Direction is launching, led by @Sidh. It is in its earlier phases, but seeks to explore learned inter-agent communication. If this is interesting, check out the project overview and join the conversation on discord!

Stay tuned for more project updates soon!

Pulse of AI

This week, a new Flash Attention version just dropped, we now know a way to teach neural networks at run time and finally, an overview of the world of Physical Neural Networks!

Flash Attention 3: The new algorithm to make LLMs just dropped and it’s making models utilize H100s more! Normally, the attention mechanism is the bottleneck for most Language Models. With the new optimizations for the algorithm, the model is capable of running at a whopping 75% of utilization, up from 35% from FlashAttention2. This new algorithm is capable of 1.2 PFLOPS when using FP8, that is a lot of operations. Just as a reference, FlashAttention2 was capable of 225 TFLOPs, that's an entire order of magnitude above for the new version of the algorithm. If you are interested in reading more about it, the original paper is here and there is an approachable thread by Tri Dao right here.

Learning to (Learn at Test Time): RNNs seem to have a resurgence because they don’t have the attention bottleneck and they have unlimited context length. Now, with the ability to have an infinite context length, researchers can make an RNN learn in context by making the hidden state a neural network in and of itself. It's incredibly interesting that you can teach a model in its context and it is going to tangibly affect the output, this is genuine prompt engineering that can affect the model weights! They trained a series of “tiny” models from 125M parameters to 1.3B. If you would like to read more, the paper is right here.

Training of Physical Neural Networks: Have you ever considered that modern models, even at their massive scale, could run on analog hardware? Physical Neural Networks are an underutilized method that uses Analog computing to train models that are faster but to this day has had some trouble to scale to the size of modern deep learning. This paper is a great overview of the current state of PNNs and their different training methods, you can find it right here.

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, Linkedin, and Mastodon!