Welcome to Research Log #040! We document weekly research progress across the various initiatives in the Manifold Research Group, and highlight breakthroughs from the broader research community we think are interesting in the Pulse of AI!

We’re growing our Research Team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our Github.

Manifold Updates

MultiNet

MultiNet is creating an omni-modal pre-training corpus to train NEKO and Benchmark other MultiModal Models or Omni-Modal Models.

Over the past few weeks, the MultiNet team has made excellent progress on assembling the first version of our omnimodal pretraining corpus to train generalist, omnimodal models like NEKO.

We’ve completed a comprehensive survey of existing datasets, found alternatives and new tasks to be included, and have documented in the issue found here.

In the coming weeks, we’ll be doing a lot of translating, conversion, and standardization work to make this dataset easily consumable.

If you would like to help in this translation process or if you are interested in training an Offline-RL model, please check out the open source repo in here or chat with us in the discord channel for MultiNet!

NEKO

The NEKO Project aims to build the first large scale, Open Source "Generalist" Model, trained on numerous modalities including control and robotics tasks. You can learn more about it here.

The NEKO team is still working on a fix to improve our perplexity score on the text modality. We are also currently working on getting the vision modality that we are using to an improved state. If you would like to dive deeper into the code of NEKO, you can check it out at the github repo!

New Projects

We’ll be announcing a few new projects very soon! Keep an eye out for news on this.

Pulse of AI

Apple Intelligence

Apple this year at WWDC, finally talked about joining the AI race. They are working on integrating “AI” into their products. They redefined the term AI by changing it to mean Apple Intelligence. Most of the integrations are happening on device and they have a new “private cloud” that is designed for queries that the local devices can’t process.

Apple introduced a new series of foundational models. The basis of these models are trained on their own open source framework, I would have loved to see the training of their models on their own private cloud. This is because they are the only company capable of competing against someone like NVIDIA for that amount of vertical integration (framework + hardware).

Apple is partnering with OpenAI to bring to every single user the power of ChatGPT on device and onto their private cloud, although they are still releasing a new model that is fully trained by them.

The main focus of Apple for this series of models is the idea of just making the end user experience better. It might be possible that it could be a sales driver for future IPhones and Macs.

As for the benchmark results, it seems that the local devices are in the same size range as something like Phi-3-mini. There might be a possibility of a 7B parameter model because the writing benchmarks are compared against those sizes.

The blogpost where they talk about the new on-device AI can be found here. And if you would like to look at WWDC, a recap can be found here.

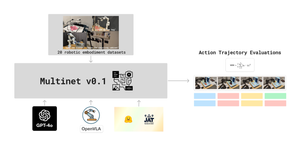

Open-VLA

Open Vision Language Action (VLM) is a new model from Standford+Berkley+(like 6 other unis) of the Prismatic Vision Language Model to specifically be used for Control. This model has a size of 7B parameters and was trained on 970k episodes of the Open-X Embodiment dataset.

Prismatic VLM is a finetune in itself from Llama7B, where they added functionality to translate any input image to embeddings through a projection layer. The new thing that Open-VLM is doing is the addition of the Control de-tokenizer that can generate Action tokens.

The model itself is getting better at generalizing unseen tasks, it absolutely crushes the RT-X series of models and is still better than Octo, another model trained on the open embodiment dataset.

We are starting to see a possible path of models that can generalize actions. The only problem is that we are already saturating tasks like the RT-X dataset. We need more challenging benchmarks that reflect how the models would perform in real life and not just pick an object and place it here.

If you would like to play around with the model, it is available at HuggingFace here, the code is actually open source and it's here, the website has some really nice gifs and can be found here and finally the paper is here.

Depth Vision 2

HKU in collaboration with Tiktok recently released the second version of the Depth Anything series of models. This time, the models are 10x more efficient and you are going to most likely see the improvements directly on the official Tiktok background removal filters.

This series of models are an iteration on the last Depth Anything series. The big challenge that they still face is the data. It drives this current generation of models, and it seems that if you can do iterative improvements on it, the models just keep getting better. This time around, they disregarded a lot of real world data and ended up using a lot of synthetic images because they could generate perfect ground truths for it.

The general pipeline for training is similar to what we saw in the first version. First, you train a large teacher model with purely synthetic images, then you can generate pseudo labels from real images, and finally, you train on the fine-grained details for the student models.

The important part here is the small model, it's incredibly fast. It's getting to the real time territory and it’s good! It has an accuracy of 95.3% and it is so small, just 25M parameters of pure goodness.

We can see that it is still a bit better on the biggest version, but again, the best part about this new model is the training pipeline that has made the data results for training future models an even easier iterative process.

If you would like to read more about this new version of Depth Anything, the paper is here and the github page is right here.

Aurora

Aurora is a new foundational model from researchers at Microsoft that is capable of simulating the atmosphere. This new model has 1.3B parameters and serves as the launching point of smaller finetunes that can be specialized on different tasks like the prediction of air pollution.

The model has three parts, the first one is a 3D encoder that passes the original input data into a representation of the atmosphere, the second and most important one is a Vision Transformer (ViT) + UNET and the third one is a 3D decoder that passes the data back to normal output maps.

One of the findings that seems obvious in retrospect is that they can train on multiple datasets and they had no problem in generalizing across a multitude of them. Normally, this area of science is defined by training an entire model in a single dataset, but Aurora was trained on multiple datasets, this made it capable of outperforming GraphCast by up to 40%.

An interesting tidbit about these models is that scaling laws do apply to them! You can see clearly that the more number of tokens they have processed, generally the better they perform. And the size in parameter count vs accuracy is incredibly good.

The model, sadly, is not open source, but the good thing is that the paper is still open, you can read it right now here.

That’s it for this week folks! See you next time.

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, Linkedin, and Mastodon!