Welcome to Research Log #021! We document weekly research progress across the various initiatives in the Manifold Research Group, and highlight breakthroughs from the broader research community we think are interesting in the Pulse of AI!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord and check out our GitHub.

NEKO

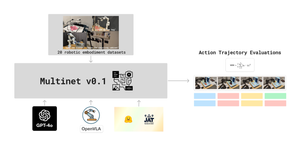

The NEKO Project aims to build the first large-scale, open-source "Generalist" Model, trained on numerous modalities including control and robotics tasks. You can learn more about it here.

- As announced in Research Log #019, NEKO is now training end-to-end on image captioning, VQA, language, and control. We’re doing some minor debugging as we prepare to scale up the training process.

- We’re also looking into new architectural paradigms, like those introduced by Gemini, Mamba, and Fuyu, and investigating multimodal benchmarks to better evaluate performance. We’re even designing our own set of generalist benchmarks! If you are interested in delving deeper, join the discussion or have a look at the data we have been reviewing.

AgentForge

The AgentForge Project aims to build models, tools, and frameworks that allow anyone to build much more powerful AI agents capable of using tools and interacting with the digital and physical worlds.

- We have pushed forward with our Agent Survey, adding several new works from Cognitive Science & RL. The purpose of this survey is to capture ideas from fields like cognitive science to better inform how large-model-based agents may work, and which architectures and capabilities can be improved. More can be found here.

- Separately, we’re reviewing tool-use capabilities. Currently, we’re looking into the API coverage of tools like Gorilla, as well as the software coverage of tools like Adept’s ACT-2. We aim to build a tool-use model capable of both GUI-based interaction and programmatic/API interaction. Stay tuned for more updates as this project develops!

Pulse of AI

There have been some exciting advancements this week!

- Mamba: The quadratic scaling of attention with sequence length has been a stubborn bottleneck for Transformer models for years, and bringing that scaling down to linear has long been a desired breakthrough. Mamba is not a Transformer: it is built on Selective State Space Models, whose parameters depend on the input, so the model can selectively use or ignore context while scaling linearly with sequence length. This is described as “a very simple generalization of S4”. If you want a quick look, read the thread from one of the authors, or the paper.

- MagicAnimate: Researchers at Show Lab (National University of Singapore) and ByteDance have achieved the generation of "Temporally Consistent Human Image Animation using Diffusion Models." Their method animates a single reference image of a person according to a given motion sequence, and they report surpassing the strongest baseline by over 38% in video fidelity. More can be found on their website, and if you want to try it out, there are some Hugging Face Spaces available.

- Gemini: Google DeepMind has entered the multimodal arena by releasing a new model that it reports outperforms GPT-4 on a range of benchmarks. This new model was trained from the ground up on text, images, video, and audio, and it seems like it will be available next quarter. If you want a deeper look, check their release page.
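
For readers curious about the "use or ignore context" idea in the Mamba item above, here is a minimal toy sketch in plain Python. It is not the Mamba implementation or its API: the function name `selective_scan` and the parameters `w_gate` and `b_gate` are hypothetical, and the state is a single scalar. It only illustrates the general idea of a recurrence whose update gate depends on the current input, computed in one pass over the sequence (so the cost grows linearly with length).

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def selective_scan(xs, w_gate=1.0, b_gate=0.0):
    """Toy input-dependent (selective) recurrence over a scalar sequence.

    w_gate and b_gate are illustrative parameters, not part of any real library.
    A gate near 1 overwrites the state with the current input;
    a gate near 0 ignores the input and keeps the previous state.
    """
    h = 0.0  # hidden state, carried across time steps
    ys = []
    for x in xs:  # a single pass: work is proportional to len(xs)
        g = sigmoid(w_gate * x + b_gate)  # gate computed from the input itself
        h = (1.0 - g) * h + g * x         # blend old state with new input
        ys.append(h)
    return ys


if __name__ == "__main__":
    print(selective_scan([1.0, -2.0, 0.5, 3.0]))
```

The design point is that the gate is a function of the input rather than a fixed constant, which is what lets such a model decide per token whether to remember or overwrite, without comparing every token against every other token as attention does.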

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, LinkedIn, and Mastodon!