Welcome to Research Log #017, where we document weekly research progress across the various initiatives in the Manifold Research Group. Also, stick around for the “Pulse of AI” section, which includes breakthroughs from the broader research community we think are interesting!

We’re growing our core team and pursuing new projects. If you’re interested in working together, join the conversation on Discord.

NEKO

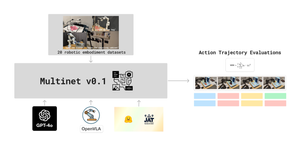

The NEKO Project aims to build the first large-scale, open source "Generalist" Model, trained on numerous modalities including control and robotics tasks. You can learn more about it here.

- Preliminary Multimodal Experiments: Last week we reported that our model was successfully training on tasks from individual modalities: Image (VQA, Image Captioning) and Language (WikiText). We’ve started testing the model on small combinations of the Image, Text, and Control/Robotics modalities, and will have some preliminary results to share soon.

- Datasets: We are looking into more datasets in the Vision and Language modalities to further test our models, and we are exploring whether to establish a benchmark. This includes taking a closer look at the MiniPile dataset as an alternative to WikiText. More can be found here.

- Language: We have fully integrated Weights & Biases so that we can properly track our test runs. We are now making the model respect the context length of the prompts it receives. More can be found here.
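As a rough illustration of what respecting the context length can mean in practice, here is a minimal sketch. It is not NEKO's actual code: the function name `fit_to_context` and its `keep` parameter are hypothetical, and it assumes a prompt is already a plain list of token ids that should simply be trimmed when it is too long.

```python
from typing import List


def fit_to_context(token_ids: List[int], context_length: int, keep: str = "tail") -> List[int]:
    """Trim a token sequence so it never exceeds the context length.

    Hypothetical helper for illustration only; not taken from the NEKO codebase.
    keep="tail" keeps the most recent tokens, keep="head" keeps the earliest ones.
    """
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    if keep not in ("head", "tail"):
        raise ValueError("keep must be 'head' or 'tail'")
    if len(token_ids) <= context_length:
        # Already short enough: return a copy unchanged.
        return list(token_ids)
    if keep == "tail":
        return list(token_ids[-context_length:])
    return list(token_ids[:context_length])


if __name__ == "__main__":
    prompt = list(range(10))          # stand-in for tokenized text
    print(fit_to_context(prompt, 4))  # [6, 7, 8, 9]
```

Whether to keep the head or the tail of an over-long prompt depends on the task, so the sketch leaves that as a parameter rather than picking one.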

Pulse of AI

- OpenAI Dev Day: OpenAI Dev Day was exciting. In addition to the advancements included with GPT-4 Turbo, OpenAI seems to be adding further tooling and emphasis on making ChatGPT a full-fledged, customizable agent, including a new GPT creator and marketplace. More can be found in their DevDay summary.

- MetNet-3: A state-of-the-art neural weather model: Google released this new model, which significantly extends both the lead time range and the set of variables that an observation-based neural model can predict for weather forecasting. More can be read in their blog post.

- Adept AI releases new experiments: Adept AI has released new experiments showing how agents can take over repetitive tasks, execute workflows, hop between tools, and even order dinner. This is super exciting, and may be the first set of models/agents that show more complex tool use! More can be read here.

If you want to see more of our updates as we work to explore and advance the field of Intelligent Systems, follow us on Twitter, LinkedIn, and Mastodon!